June 4, 2017

Researchers from Princeton University's Department of Computer Science have come up with a way to help robots and other machines get a clearer picture of their surroundings in three dimensions.

If a robot needs to clean a room, for example, it needs to know where it can move in order to navigate around the space. It also needs to identify different objects in the room so it can make the bed or put away the dishes.

The researchers have come up with a way for the robot to understand both at the same time.

To do this, the researchers have developed a method of producing a complete three-dimensional representation of a scene, along with labels for the scene's objects, from a single two-dimensional image. They refer to this process as semantic scene completion.

"A robot needs to understand two levels of information," explained Shuran Song, the lead author of the researchers' paper, which is scheduled for presentation at the CVPR conference in Hawaii in July. The first level of information helps the robot interact with its surroundings: it can identify open space for movement, and locate objects to manipulate. The second and higher level of information allows the robot to understand what an object is so it can be used to perform certain tasks.

According to Song and her colleagues, previous work aimed at improving robot comprehension of the visual world focused on these two levels – scene completion and object labeling – separately. But the Princeton team realized that the two functions are more successful when tackled together.

In the real world, robots have to identify objects from partial observations. Even if the robot's view of a chair is partly blocked by a table, the robot still needs to recognize the object as a chair and understand that it has four legs and sits on the floor. The researchers realized this was much easier if the robot were able to combine its basic recognition of a chair-like shape with the layout of the room: the shape next to a desk is highly likely to be a chair.

"The algorithm works better when both tasks reinforce each other," said Song, a Ph.D. student in computer science at Princeton.

Based on this insight, the researchers created a software system, called SSCNet, that uses an algorithm to teach a robot how to recognize objects and complete the three-dimensional structure of a scene.

Starting with an image taken with a Kinect camera, the network encodes it as a three-dimensional space. The system then uses a machine-learning model to learn about the space by using three-dimensional contextual information, which helps the robot, for example, recognize a partially hidden chair.
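To make the encoding step concrete, here is a minimal Python sketch of one simple way a depth image can be turned into a three-dimensional grid. It assumes a standard pinhole camera model and plain occupied/empty cells; the function name `depth_to_occupancy` and all of its parameters are illustrative, and this is not necessarily the encoding SSCNet itself uses.

```python
import numpy as np

def depth_to_occupancy(depth, fx, fy, cx, cy, voxel_size, grid_shape, origin):
    """Back-project a depth image (in meters) into a boolean occupancy grid.

    All names and parameters are hypothetical and chosen for illustration.
    The returned grid is indexed as (x, y, z).
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]            # pixel row (v) and column (u) indices
    valid = depth > 0                     # zero depth is treated as "no reading"

    # Pinhole camera model: pixel (u, v) at depth z maps to a 3D point (x, y, z).
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x[valid], y[valid], depth[valid]], axis=1)

    # Convert metric coordinates to integer voxel indices.
    idx = np.floor((points - np.asarray(origin)) / voxel_size).astype(int)

    # Discard points that fall outside the grid.
    inside = np.all((idx >= 0) & (idx < np.array(grid_shape)), axis=1)
    idx = idx[inside]

    grid = np.zeros(grid_shape, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid
```

A grid built this way marks only the surfaces the camera can actually see. Filling in the hidden parts of the scene is the job the article describes the network as learning.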

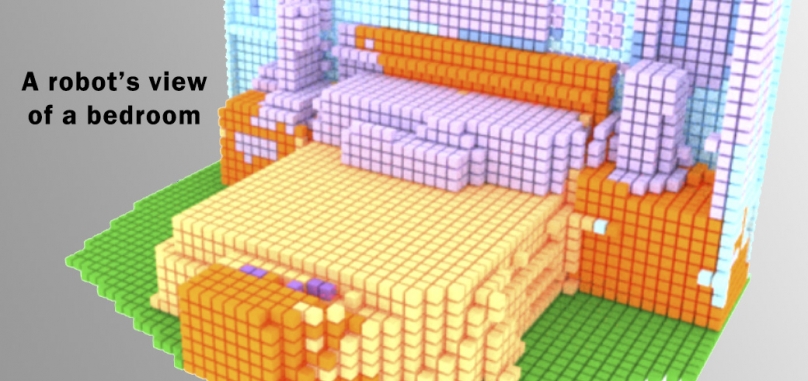

At the end of the process, the network creates a three-dimensional image of the scene by dividing it into small sections called voxels. Just as pixels are the tiny elements of a two-dimensional image, voxels (volumetric pixels) are the building blocks of a 3D image. Each voxel in the image, including those hidden from the camera's direct view, is labeled as occupied by an object category or by empty space.
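As a rough illustration of what such an output looks like, the Python sketch below stores one category label per voxel in a small grid. The category list, the grid size, and the `summarize` helper are assumptions made up for this example; they are not taken from SSCNet.

```python
import numpy as np

# Hypothetical category ids; 0 is reserved for empty space.
LABELS = {0: "empty", 1: "floor", 2: "chair", 3: "table"}

def summarize(labels):
    """Count how many voxels carry each category label."""
    ids, counts = np.unique(labels, return_counts=True)
    return {LABELS[int(i)]: int(c) for i, c in zip(ids, counts)}

# A tiny 4x4x4 scene: every voxel starts out as empty space.
scene = np.zeros((4, 4, 4), dtype=np.uint8)
scene[:, :, 0] = 1          # the bottom layer is floor
scene[1, 1, 1:3] = 2        # a two-voxel-tall "chair"
scene[2:4, 2:4, 2] = 3      # a flat table top

print(summarize(scene))
```

Every cell gets a label whether or not a camera could see it, which is what distinguishes a completed scene from a raw depth scan.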

"For a long time we've realized that accomplishing many seemingly simple tasks in image processing really can't be done by looking at small areas in isolation. It requires understanding the entire scene and what objects are within it," said Szymon Rusinkiewicz, a Princeton computer science professor who focuses on the interface between computers and the real world. Though Rusinkiewicz wasn't involved in the SSCNet project, he believes it is significant because it links the tasks of understanding a 3D scene and filling holes in 3D data to improve system performance.

Training problem solved

Machine learning works best when the computer can analyze large amounts of data. But there was practically no real-world data that fit all the researchers' needs: labels for objects, their full three-dimensional structure, and their positions within the scene. So the researchers turned to a library of images created by online users working on room designs.

The researchers used these synthetic images to create a dataset that could be used by the computer. The effort continues, but their dataset, called SUNCG, currently contains over 45,000 densely labeled scenes with realistic room and furniture arrangements.

The team's network trained itself using the 45,000 examples until it could accurately perceive the spatial layout of new scenes and identify the objects they contained. This training significantly improved the network's ability to analyze new scenes, the researchers report. In fact, experiments conducted by the team show that its method of jointly determining the space occupied by objects in a scene and labeling those objects outperforms methods of handling either task in isolation.

On the downside, the network does not use color information, which can make it harder for the system to identify objects lacking significant depth (such as windows) and distinguish between different objects with similar geometries. In addition, processor memory constraints result in relatively low detail for the information it produces about geometry, causing the network to sometimes miss small objects.

In addition to robots, the joint-identification approach could improve the performance of a variety of devices that assist people, said Thomas Funkhouser, a professor of computer science at Princeton and the project's principal investigator. "For any device that's trying to understand the environment it's in and how to interact with that environment, understanding the 3D composition of a scene is very important."

Besides Funkhouser and Song, the authors include Angel Chang and Manolis Savva, postdoctoral researchers in computer science at Princeton, and Fisher Yu and Andy Zeng, graduate students in computer science at Princeton. Support for the research was provided in part by Intel, Adobe, NVIDIA, Facebook and the National Science Foundation.